Gamepad support in Unity looks deceptively easy if you look at the Input API: just a collection of buttons and axes, right?

Of course, the devil is in the details. You can go ahead and set up your button mappings using your 360 controller, your PS4 controller, your Logitech, or what-have-you. Run it, and it will all work great. On your gamepad. On your OS. The minute your game goes to someone with a different brand of gamepad, or sometimes even a different OS, your gamepad support breaks. Up and down on the right analog stick suddenly rotate the camera left and right instead, none of the face buttons work, and other controls are similarly mismatched. Why? Because no one in the game controller industry seems able to agree on which button is the first button, which axis number should correspond to up and down on the left analog stick, and so on. The situation is especially bad with Xbox-family controllers, whose mappings differ on each of the 3 major OSes.

You could, of course, make players go through a one-time controller setup: a digital game of Marco Polo in which the game asks you to press a particular button and then listens to figure out which button that maps to on your controller. But that is not really an acceptable user experience today.
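The listening half of that setup is simple enough to sketch. The Python below is illustrative only: `calibrate`, `detect_new_press`, and the `frames_for` callback (a stand-in for polling raw joystick buttons every frame while a "Press <control>" prompt is on screen) are hypothetical names, not part of Unity's Input API. For each logical control, it watches successive snapshots of raw button state and records the first raw index that goes from released to pressed, skipping indices already assigned to another control.

```python
from typing import Callable, Dict, Iterable, List, Optional

# Hypothetical raw snapshot: raw button index -> pressed?
Snapshot = Dict[int, bool]


def detect_new_press(before: Snapshot, after: Snapshot) -> Optional[int]:
    """Return the lowest raw index that went from released to pressed, if any."""
    for index in sorted(after):
        if after[index] and not before.get(index, False):
            return index
    return None


def calibrate(controls: List[str],
              frames_for: Callable[[str], Iterable[Snapshot]]) -> Dict[str, int]:
    """Ask for each control in turn and remember which raw button the player pressed."""
    mapping: Dict[str, int] = {}
    for control in controls:
        # In a game, an on-screen prompt for `control` would be shown here.
        previous: Snapshot = {}
        for snapshot in frames_for(control):
            index = detect_new_press(previous, snapshot)
            if index is not None and index not in mapping.values():
                mapping[control] = index
                break
            previous = snapshot
    return mapping
```

For example, if the player presses raw button 3 when asked for "jump" and raw button 1 when asked for "fire" (while still holding 3), the result is `{"jump": 3, "fire": 1}`. Every player has to sit through this once per controller, which is the user-experience cost described above.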

Imagine if we still had to write different code to accommodate different graphics cards, like we did with the first generation of 3D accelerators. It would be a total nightmare! Yet this is pretty much the nightmare we face when trying to support game controllers. Very early on, the concept of the Hardware Abstraction Layer (HAL) was applied to 3D graphics accelerators, so that, to our code, all accelerators looked and worked the same (save for differences in capabilities). These days, we rarely even have to think about whether a user is running an NVIDIA or AMD/ATI graphics adapter.

Unfortunately, no such animal exists for gamepads at the system level, at least not to the degree that we can ask a system-level library like DirectX, “Give me the status of the left analog stick’s Y axis,” and have it mean the same thing on every device. The closest thing we have is the USB HID specification, but it was designed for very generic input devices and does not establish consistent button/axis mappings across them.

So instead, we are forced to build databases of controller configurations, and build (or buy) our own abstraction layers that hopefully support every controller under the sun, knowing that somewhere, someone has a controller that has not been accounted for and is incompatible.
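A minimal sketch of such a configuration database and the abstraction layer on top of it, written in Python purely for illustration (actual Unity scripts would be C#). The profile names, logical control names, and raw indices are hypothetical placeholders, not verified mappings for any real controller. The idea is that the same physical button can arrive under different raw indices depending on the OS, so game code asks for a logical control and the database translates it.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass(frozen=True)
class Binding:
    kind: str             # "button" or "axis"
    index: int            # raw index as reported by the OS/driver
    invert: bool = False  # hypothetical: some drivers may report an axis flipped


# Hypothetical database: (controller profile, OS) -> logical control -> raw binding.
# Index values are illustrative placeholders, NOT verified mappings for real hardware.
MAPPINGS: Dict[Tuple[str, str], Dict[str, Binding]] = {
    ("xbox360", "windows"): {
        "action1": Binding("button", 0),
        "right_stick_y": Binding("axis", 4),
    },
    ("xbox360", "macos"): {
        "action1": Binding("button", 16),
        "right_stick_y": Binding("axis", 3, invert=True),
    },
}


@dataclass
class RawState:
    """Raw, unlabeled input as the OS reports it: index -> value."""
    buttons: Dict[int, bool] = field(default_factory=dict)
    axes: Dict[int, float] = field(default_factory=dict)


def read_control(profile: str, os_name: str, control: str, state: RawState) -> float:
    """Translate a logical control name into a value using the database."""
    binding = MAPPINGS.get((profile, os_name), {}).get(control)
    if binding is None:
        # The controller (or OS) that nobody accounted for.
        raise KeyError(f"no mapping for {control!r} on {profile}/{os_name}")
    if binding.kind == "button":
        return 1.0 if state.buttons.get(binding.index, False) else 0.0
    value = state.axes.get(binding.index, 0.0)
    return -value if binding.invert else value
```

With `RawState(buttons={16: True})`, `read_control("xbox360", "macos", "action1", ...)` returns `1.0`, while the same raw state read through the Windows profile returns `0.0`: identical raw data, different meaning per OS. An unknown profile simply raises here; a real layer needs some fallback, and that is exactly where someone's unlisted controller ends up unsupported.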

Unity also does not help much in this regard, which is a shame. A well-documented lack of run-time configurability of inputs (as recently as Unity 5.2) all but ensures that serious game development projects will eventually need to build or buy a replacement for the built-in input system.

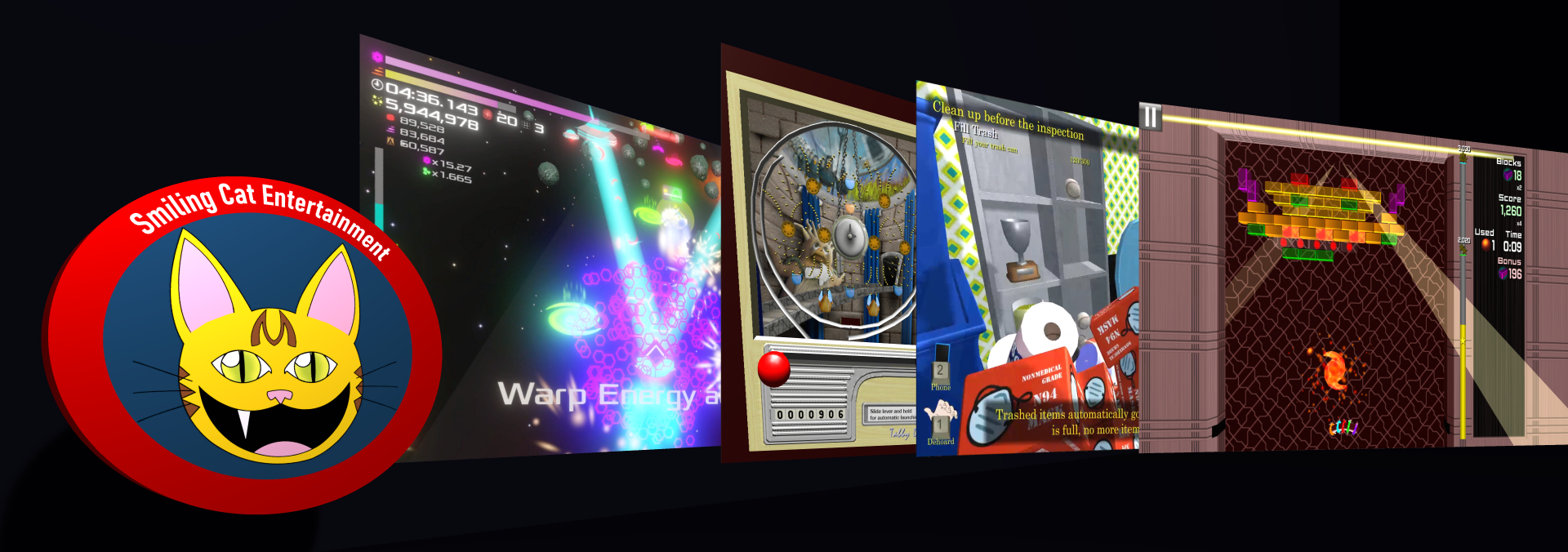

For Dehoarder 2’s gamepad support, I’m going to try the InControl component from Gallant Games. It seems to integrate best, even allowing me to hook into the newer uGUI event system, so that I can use built-in Unity functionality like controller-based menu navigation.

I could have tried building something like InControl myself, though it would have taken me dozens, if not hundreds, of hours to get it working right. Plus, I’ve only ever written this kind of code on the PC, never on OS X or Linux. All of that mess versus $35 for something that is well polished and likely supports more scenarios than I’d ever need. Easy decision.

I’m still trying to find a good way around paying $65 for a library that allows me to inject icons (like controller buttons) into my text, though…